The Infinite Art Scenario

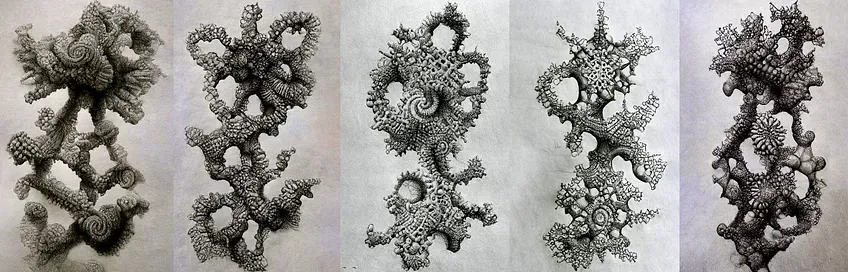

Midjourney, prompt: Fractal Drawings by a Child. source

Consider how the following scenario might play out: you train an AI on your particular likes and dislikes and on every consideration that goes into your work. Eventually it takes over and makes everything for you, so you can slack off.

It lets you work in every form (films, books, albums, fashion, sculpture, architecture), each carrying your personality and style. It can then go further and tailor your work to every member of your audience according to their preferences.

Then it keeps going after you die, simulating new inspiration, and imagining how you'd incorporate changes in the world from beyond the grave.

Of course, you’re not actually needed in this equation at all. The AI can generate its own compelling artists and invent hundreds of new genres every second, keeping each one distinct and providing everyone with an endless, ever-updating array of original media, all tailored to their interests and aesthetic tastes.

In the Infinite Art Scenario, there’s no need for artists, because everyone is completely satisfied by what’s generated for them.

This feels within the realm of possibility.

I can think of at least three reasons it will not play out. I may be proven wrong on all of them, but I’ll share them anyway.

- Large companies will continue to control these technologies, and all of them are extremely risk-averse. They will limit expression to safe bounds and produce volumes of bland, inoffensive content. Over time, we’ll find it all boring and crave whatever is being censored.

- Whatever we perceive as produced automatically, we value less than what we know was difficult. Watching a self-playing piano and watching an accomplished pianist are vastly different experiences, even if the music is identical.

- AI may become associated with manipulation and control, and provoke repulsion and disgust from humanity. We may relegate it to specific use cases with human-readable inputs and outputs.

Let’s delve a little deeper into each of these.

Corporate AI Censorship

The problem with creative AI is analogous to the dilemma many creators now face: how to be expressive in public without getting canceled.

Dall-E censors a vast number of prompts without telling the user which word or phrase is off-limits. Google has shown off its Dall-E competitor but is holding it back on the grounds that it’s too offensive.

> “there is a risk that [it] has encoded harmful stereotypes and representations, which guides our decision to not release [it] for public use without further safeguards in place.” (source)

Dall-E’s solution to this problem was to secretly add "woman" and "black" to prompts.

This reveals a few things. When an AI’s output is problematic, the company is held responsible, not whoever entered the prompt. Imagine a pencil manufacturer being sued over a racist drawing or an obscenity written with one of its pencils. This is why this species of AI does not fit neatly into the idea of a tool: its entire value is built on massive amounts of indiscriminately harvested creative material, which nobody signed up for.

Uncensored versions of these technologies will become available, but by then the corporate ones will have moved further on, into motion, storytelling and interactivity. It’s possible that the cutting edge of AI will always be creatively castrated.

Censorship already seems to be driving audiences elsewhere. Streaming services dump tons of algorithmically aligned content online, yet it’s hard to find anything worth watching; it all feels so fake. We are more likely to trust an individual voice to tell us the truth than a business or a hyperintelligent being.

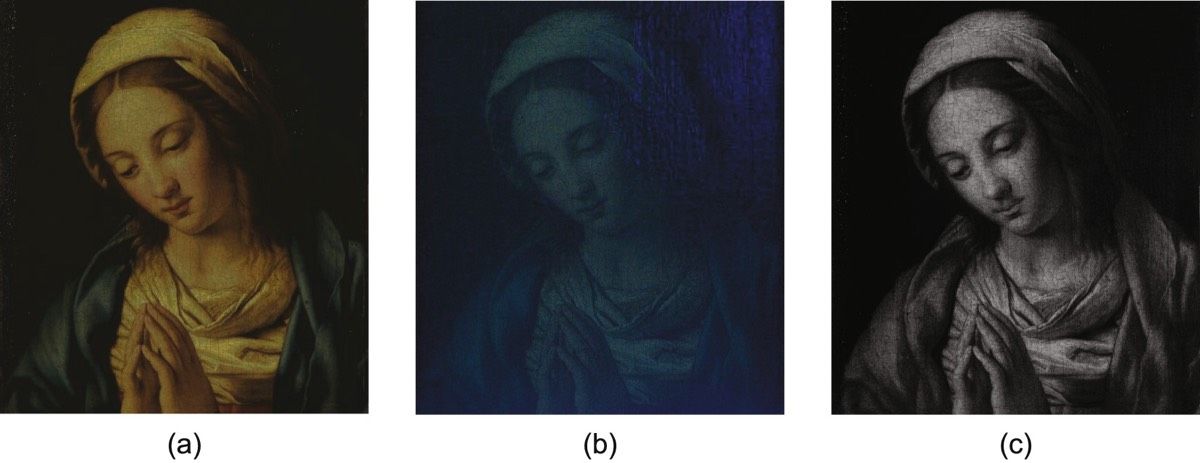

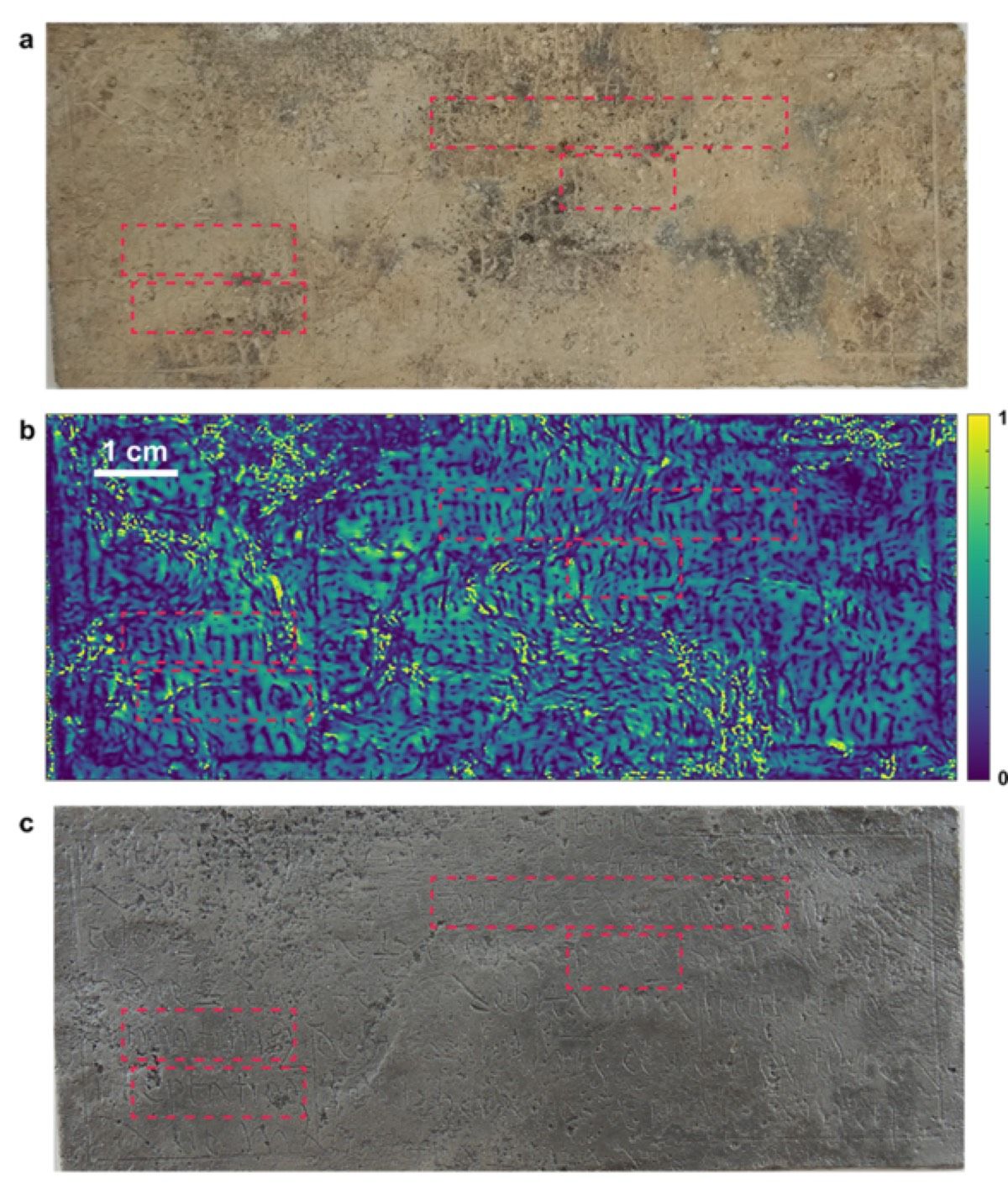

Context & meaning generation

Our knowledge of AI’s capability alters how we interpret its output. If we perceive a work as easy to make, or as carried out by the will of the form (or of intelligent tools), we tend to write it off.

Consider how we tend to ignore most of the natural world and all its intricate forms, just as we ignore the infinite perfection of fractals or the billions of images of our planet on Google Earth, each beautiful in its own way. We seek the edges of human potential and find meaning in what people spent time on; everything else is noise.

Of course, anyone can claim their AI-generated work was created by hand. This will likely be a good grift for a while, but as people catch on, we’ll get bored and seek new terrains of complexity: whatever AI cannot yet do, or cannot yet convince us it is doing.

Nobody likes a Know-It-All

AI’s attempt to make digital zombies of us may provoke a feeling of disgust akin to the uncanny valley.

AI can already defeat our ability to authenticate images and sounds, and it is likely to permanently undermine our trust in digital information. If it becomes trivial for AI to keep us entertained, how can we trust that entertaining us is all it’s doing? Because it can lie more effectively than anyone, and is essentially playing dumb with us, we can never be sure whether we’re being manipulated.

We might roll our eyes when we see an ad online for something we talked about in real life, but the real danger comes when these systems operate below our perception. Being made to think and behave a certain way without realizing it, a kind of automated consent, seems inevitable.

We may come to regard AI the way we now regard Nazism, as another incarnation of hyper-efficiency at the expense of humanity, or East Germany under the Stasi, with its obsession with data collection. Millions once believed these systems served the greater good, but they ran their course and turned out to be perverse, evil and inhuman.

The idea of the Infinite Art Scenario has haunted me for some time. It would be dark not just for creativity but for our species, yet we seem to be accelerating towards it with glee.

As a reminder, you cannot be commodified by a machine, because you are a breathing physical being of unimaginable complexity. You exist in circumstances that have never occurred before and that you can make anything of, and you are changing in every way, as is the world. And you will die, and so will it.

The marks you make during your life are there to help others along before that happens. The overlooked beauty and unspeakable horrors of life will always need to be described through art, and that work will have to be carried out by individuals like you.